An Introduction to Perplexity

And its application to the evaluation of Language Models

In the last blog post, I talked about Large Language Models and their limitations in terms of language. But what methods exist to measure how well a Language Model actually performs? Typically, you train the model on a corpus, apply it to a held-out test set in an actual task, e.g. machine translation, and rate the accuracy of the result. The metrics used to evaluate the results fall into two important categories:

Extrinsic Evaluation — Focuses on the performance of the final objective (i.e. the performance of the complete application)

Intrinsic Evaluation — Focuses on intermediary objectives (i.e. the performance on a defined subtask)

In the following, we focus on an important intrinsic evaluation method called perplexity. How is perplexity defined and what’s the conceptual meaning?

Let’s look at an example. Consider an arbitrary language L; to keep things simple, we use English. A Language Model acting on L is basically a probability distribution¹ that assigns probabilities to sequences of symbols (w₁,w₂,…,wₙ), where a higher probability indicates that the sequence is more likely to occur in the language. Such a symbol could be a character, a word, or a sub-word (for instance, the word 'going' can be split into two sub-words: 'go' and 'ing'). Typically, language models calculate the probability of a sequence as the product of the probabilities of each symbol, given its predecessors.
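Written out, this product is the chain rule of probability. The notation below is a sketch that reuses the symbols w₁,…,wₙ from the paragraph above; P stands for the distribution the language model defines.

```latex
P(w_1, w_2, \ldots, w_n) = \prod_{i=1}^{n} P(w_i \mid w_1, \ldots, w_{i-1})
```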

How can we model this probability chain?

N-gram model

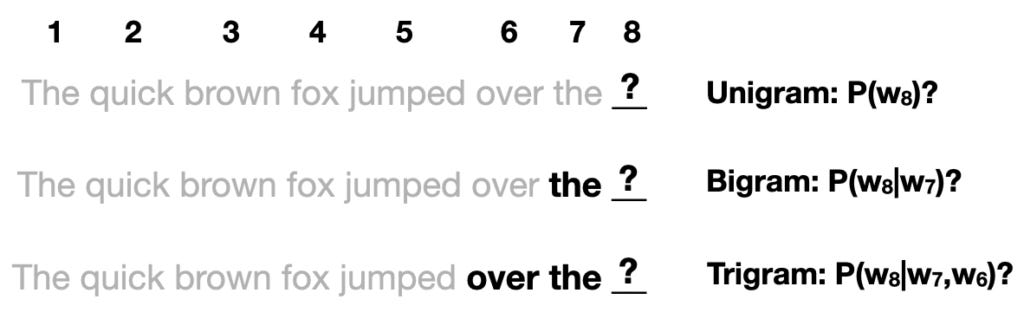

An n-gram is a contiguous sequence of n items, in our case words, from a given sample of text; an n-gram model estimates the probability of the next word from the n−1 words that precede it. As the 'n' prefix suggests, there are different kinds of n-grams. Most commonly, a unigram is a single word from the text, such as 'model'; a bigram consists of two consecutive words, for example 'language model'; and a trigram comprises three successive words, like 'advanced language model'.

With the help of these n-gram models, we can estimate the probability of the next word from how often each n-gram occurs in the training corpus.
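To make this concrete, here is a minimal sketch of a bigram model in Python. The toy corpus and the function name `train_bigram` are my own assumptions for illustration; the model simply counts which word follows which and normalizes the counts, without the smoothing a real model would need.

```python
from collections import Counter, defaultdict

# Toy corpus (hypothetical, for illustration only).
corpus = (
    "the language model predicts the next word "
    "and the language model learns"
).split()


def train_bigram(tokens):
    """Count bigrams and turn the counts into conditional probabilities.

    Returns a dict mapping each word to a dict of P(next word | word).
    No smoothing is applied, so unseen bigrams get no probability at all.
    """
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return {
        prev: {word: c / sum(nexts.values()) for word, c in nexts.items()}
        for prev, nexts in counts.items()
    }


model = train_bigram(corpus)
print(model["the"])       # distribution over words that follow 'the'
print(model["language"])  # distribution over words that follow 'language'
```

In this toy corpus, 'the' is followed by 'language' twice and by 'next' once, so the model assigns 'language' a probability of 2/3 after 'the'.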

Now that we have seen how n-gram models use the probabilities of word sequences to predict the next word, we need to understand entropy, a concept that quantifies the unpredictability of these predictions and therefore underlies how we measure the performance of our language models.

Entropy

The goal of our arbitrary language L is to convey information. Entropy is a metric from information theory that measures the average amount of information conveyed in a message. It was introduced by Claude Shannon². While entropy in language shares some similarities with the concept of entropy in thermodynamics, the two concepts are not identical.

To better understand the notion of entropy in language, let’s reference Shannon’s seminal paper, "Prediction and Entropy of Printed English"³. He writes:

The entropy is a statistical parameter which measures, in a certain sense, how much information is produced on the average for each letter of a text in the language. If the language is translated into binary digits (0 or 1) in the most efficient way, the entropy is the average number of binary digits required per letter of the original language.
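The "average number of binary digits" reading can be checked on a small example. The following is a sketch with two made-up distributions over four symbols (the probabilities are assumptions chosen for illustration, not measurements of English).

```python
import math


def entropy_bits(probs):
    """Shannon entropy in bits: the average number of binary digits per symbol."""
    # Terms with p == 0 contribute nothing, so they are skipped.
    return -sum(p * math.log2(p) for p in probs if p > 0)


uniform = [0.25, 0.25, 0.25, 0.25]   # every symbol equally likely
skewed = [0.5, 0.25, 0.125, 0.125]   # one symbol dominates

print(entropy_bits(uniform))  # 2.0
print(entropy_bits(skewed))   # 1.75
```

The skewed distribution is more predictable, so on average it needs fewer bits per symbol than the uniform one.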

Shannon proposes that the entropy of any language (denoted as H) can be computed by a function H(N) that quantifies the amount of information, spanning over N adjacent letters of text. Let bₙ denote a sequence of contiguous letters (w₁,w₂,…, wₙ):
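A common way to write H(N) is the block entropy normalized per letter, sketched below. Two assumptions are mine: the sum runs over all possible sequences bₙ of length N, and the result is divided by N so that it is measured in bits per letter. Shannon's paper works with a closely related conditional quantity that converges to the same limit H.

```latex
H(N) = -\frac{1}{N} \sum_{b_n} P(b_n) \log_2 P(b_n),
\qquad
H = \lim_{N \to \infty} H(N)
```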

Cross-Entropy

Cross-entropy is closely related to entropy. While entropy measures the uncertainty or randomness inherent in a single probability distribution, cross-entropy measures the dissimilarity between two probability distributions. In a language model, one of these distributions is usually the true data distribution (the actual language), and the other is our model's predicted distribution. This makes cross-entropy a direct measure of model performance.
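A small numerical sketch shows the idea. The three distributions below are invented for illustration: `true_dist` plays the role of the actual language, and the two models are hypothetical predictions of it.

```python
import math


def cross_entropy_bits(p, q):
    """Cross-entropy in bits between a true distribution p and a model q."""
    # Symbols with p == 0 never occur, so they contribute nothing to the sum.
    return -sum(pi * math.log2(qi) for pi, qi in zip(p, q) if pi > 0)


true_dist = [0.5, 0.25, 0.25]
good_model = [0.5, 0.25, 0.25]   # matches the true distribution exactly
poor_model = [0.25, 0.25, 0.5]   # puts its mass on the wrong symbol

print(cross_entropy_bits(true_dist, good_model))  # 1.5
print(cross_entropy_bits(true_dist, poor_model))  # 1.75
```

When the model matches the true distribution, the cross-entropy equals the entropy of that distribution (1.5 bits here); any mismatch can only increase it.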

Analogous to Shannon's entropy, the cross-entropy between the true distribution P and our model's distribution Q over a sequence of N adjacent letters or words (w₁, w₂,…,wₙ) can be expressed as:
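One standard way to write this is sketched below, using P, Q and the sequences bₙ = (w₁, w₂,…,wₙ) from the text. The division by N, which gives a value in bits per symbol, is my assumption. Since P is unknown in practice, the second line shows the usual estimate on a long test sequence, which relies on the language behaving like a stationary, ergodic source.

```latex
H(P, Q) = -\frac{1}{N} \sum_{b_n} P(b_n) \log_2 Q(b_n)

H(P, Q) \approx -\frac{1}{N} \sum_{i=1}^{N} \log_2 Q(w_i \mid w_1, \ldots, w_{i-1})
```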

Perplexity

Finally, we arrive at the point where we can define perplexity, often described as a way of quantifying the 'surprise' a language model experiences in predicting unseen data in a test set. Simply put, a lower perplexity implies that the model's predictions align more closely with the actual outcomes, making it less 'perplexed' by new data.

Perplexity is defined by the following equation:
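In the notation of this post, and assuming the cross-entropy H(P, Q) is measured in bits per symbol (so the base of the exponent is 2), the definition can be sketched as follows. The second form is the same quantity evaluated on a test sequence of N symbols.

```latex
PP(P, Q) = 2^{H(P, Q)}

PP(w_1, \ldots, w_N) = Q(w_1, \ldots, w_N)^{-\frac{1}{N}}
```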

With this formula, perplexity is a monotonically increasing function of cross-entropy, meaning that the lower the cross-entropy (i.e., the closer our model's distribution is to the true distribution), the lower the perplexity, and thus, the better our model is at predicting unseen data.
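This relationship is easy to try out. The sketch below assumes we already have the probability a model assigned to each token that actually occurred in a test sequence; the two probability lists are invented for illustration, and the function names are my own.

```python
import math


def cross_entropy_bits(token_probs):
    """Average negative log2 probability the model gave to each observed token."""
    return -sum(math.log2(p) for p in token_probs) / len(token_probs)


def perplexity(token_probs):
    """Perplexity as 2 raised to the per-token cross-entropy in bits."""
    return 2 ** cross_entropy_bits(token_probs)


# Hypothetical per-token probabilities from two models on the same test text.
confident_model = [0.5, 0.5, 0.5, 0.5]
uncertain_model = [0.125, 0.125, 0.125, 0.125]

print(perplexity(confident_model))  # 2.0
print(perplexity(uncertain_model))  # 8.0
```

A perplexity of 8 can be read as the model being as uncertain as if it had to choose uniformly among 8 options at every step, whereas the more confident model behaves as if it were choosing between only 2.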

Key Takeaways

Language models assign probabilities to sequences of symbols (characters, words, or sub-words) in a language. These probabilities can be estimated with n-gram models, which examine the likelihood of each symbol given its preceding context.

The concept of entropy, introduced by Claude Shannon, is central to understanding the performance of language models. Entropy measures the average information density in a message and, in a language model, quantifies the unpredictability of word predictions.

Cross-entropy extends the concept of entropy by measuring the divergence between two probability distributions: the true data distribution (actual language) and the model's predicted distribution. In a language model evaluation, lower cross-entropy implies closer alignment between the model's predictions and the actual language, which leads to a lower perplexity score.

Perplexity is a fundamental metric in evaluating language models, quantifying the 'surprise' a model experiences when predicting unseen data. Lower perplexity indicates better model performance, reflecting predictions that align more closely with the actual language.

Thank you for reading.

Sources:

https://thegradient.pub/understanding-evaluation-metrics-for-language-models/#:~:text=Traditionally%2C%20language%20model%20performance%20is,they%20perform%20on%20downstream%20tasks.

https://towardsdatascience.com/perplexity-intuition-and-derivation-105dd481c8f3

https://towardsdatascience.com/the-intuition-behind-shannons-entropy-e74820fe9800

https://www.deeplearningbook.org/contents/prob.html

https://towardsdatascience.com/the-most-common-evaluation-metrics-in-nlp-ced6a763ac8b

¹ If you need a probability distribution refresher, I highly recommend reading this source: https://www.deeplearningbook.org/contents/prob.html

² Claude Elwood Shannon. A mathematical theory of communication. Bell system technical journal, 27(3):379–423, 1948

³ Claude Elwood Shannon. Prediction and entropy of printed English. Bell system technical journal, 30(1):50–64, 1951. https://www.princeton.edu/~wbialek/rome/refs/shannon_51.pdf